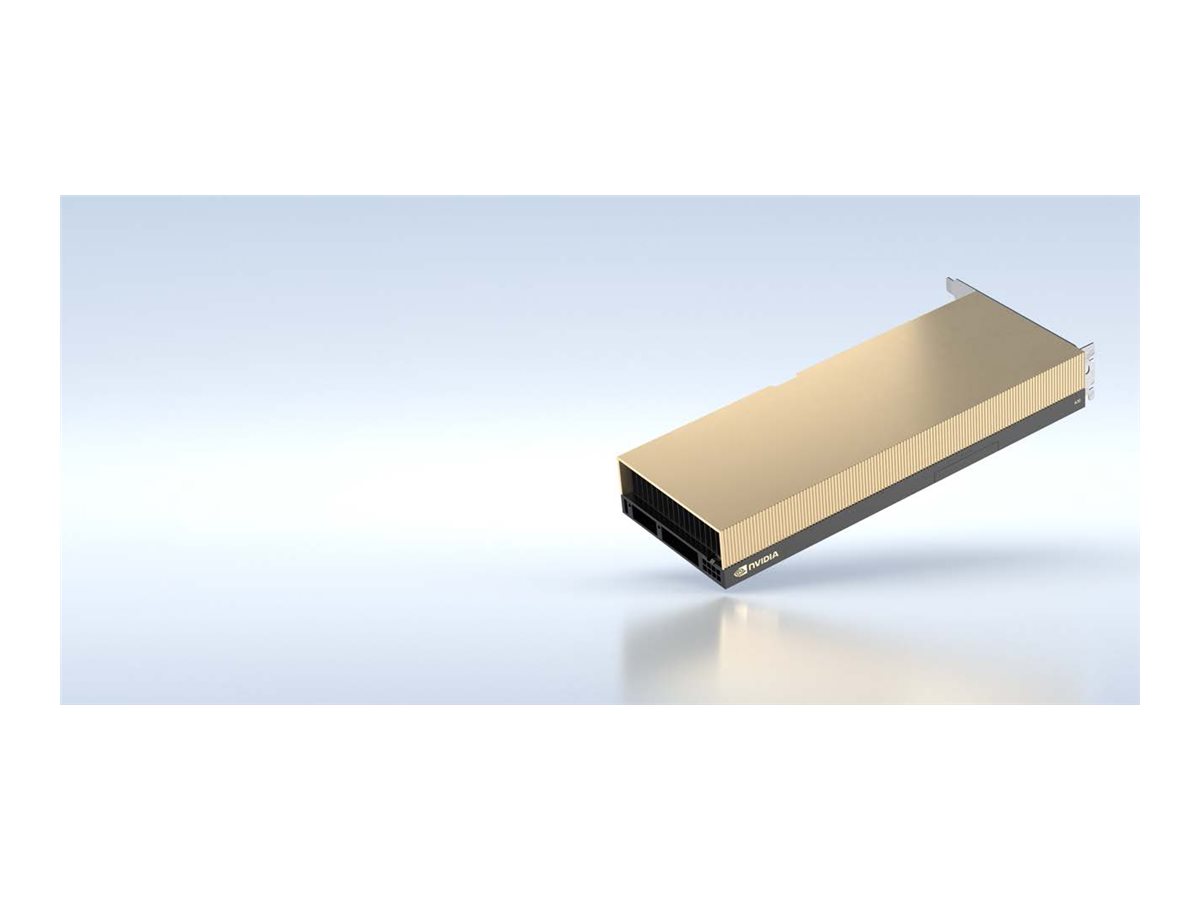

Accelerate any business workload with the high-performance NVIDIA A30 Tensor Core GPU. Built on the Tensor Cores of the NVIDIA Ampere architecture and Multi-Instance GPU (MIG) technology, the A30 delivers significant speedups across a wide variety of workloads, including AI inference at scale and high-performance computing (HPC) applications. Combining fast memory bandwidth and low power consumption in a PCIe form factor optimized for mainstream servers, the A30 enables elastic data centers and offers an excellent return on investment.

High-performance data center solution for modern infrastructures

The NVIDIA Ampere architecture is part of the unified NVIDIA EGX platform, which brings together hardware, networking, software, libraries, and optimized AI models and applications from the NVIDIA NGC catalog. NVIDIA positions it as the most powerful end-to-end AI and HPC platform for data centers, allowing researchers to deliver real-world results quickly and deploy solutions into production at scale.

Deep Learning training

As AI models grow in complexity to take on challenges such as conversational AI, training them requires ever greater scalability and computing power. The A30 GPU's Tensor Cores with TF32 (Tensor Float 32) precision deliver up to 10 times the performance of the NVIDIA T4 GPU without any change to the source code, and automatic mixed precision with FP16 adds a further 2x speedup, for a combined throughput increase of up to 20 times. Together with NVIDIA NVLink, PCIe Gen4, NVIDIA Mellanox networking and the NVIDIA Magnum IO SDK, this makes it possible to scale to thousands of GPUs. Tensor Cores and MIG technology also let the A30 handle a wide variety of workloads dynamically throughout the day: the GPU can serve production inference when demand peaks, and part of its resources can be repurposed to retrain AI models during off-peak hours.
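To make the TF32 trade-off concrete, here is a minimal Python sketch of what the format keeps and what it drops. TF32 retains the 8-bit exponent of FP32 (same range) but only 10 explicit mantissa bits (roughly FP16 precision). The helper name `round_to_tf32` is hypothetical, and the round-to-nearest-even behavior is an assumption of this sketch rather than a statement about how the hardware rounds; NaN inputs are not handled.

```python
import struct


def round_to_tf32(x: float) -> float:
    """Round x to TF32 precision: FP32 exponent range, 10 explicit mantissa bits."""
    # Reinterpret the value as the 32 raw bits of an IEEE 754 float32.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # float32 stores 23 mantissa bits; TF32 keeps the top 10, so 13 are dropped.
    drop = 13
    # Round to nearest, ties to even (assumed rounding mode for this sketch).
    bits += (1 << (drop - 1)) - 1 + ((bits >> drop) & 1)
    # Clear the dropped bits and stay within 32 bits.
    bits &= ~((1 << drop) - 1) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for value in (1.0, 0.1, 3.14159265, 1.0 + 2 ** -12):
        approx = round_to_tf32(value)
        print(f"{value!r} -> {approx!r} (relative error {abs(approx - value) / value:.2e})")
```

Values keep their magnitude but lose low-order digits, which is why TF32 is typically used for the inputs of matrix multiplications while accumulation stays in FP32.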

High-performance computing

To make new scientific discoveries, researchers rely on advanced simulations to better understand the world around them. With the FP64 Tensor Cores of the Ampere architecture, the NVIDIA A30 GPU represents the biggest leap in HPC performance since the introduction of GPUs. With 24 GB of GPU memory and 933 GB/s of memory bandwidth, researchers can complete double-precision calculations faster. HPC applications can also use the A30 GPU's TF32 capabilities to accelerate single-precision dense matrix multiplication. The combination of FP64 Tensor Cores and MIG technology allows research institutions to securely partition the GPU so that multiple researchers can access compute resources with guaranteed quality of service and maximum GPU utilization. Companies deploying AI can use the inference capabilities of the NVIDIA A30 GPU during peak periods, then dynamically reconfigure the same compute servers to run HPC or AI training workloads during off-peak hours.
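As a rough illustration of what the 933 GB/s figure means for double-precision work, the following Python sketch computes a lower bound on the time needed to stream FP64 data through GPU memory. It assumes the peak bandwidth is fully sustained, which real kernels rarely achieve, and the function name `min_stream_time_s` is hypothetical.

```python
PEAK_BANDWIDTH_BYTES_PER_S = 933e9  # 933 GB/s, the peak figure quoted above
BYTES_PER_FP64 = 8                  # size of one double-precision value


def min_stream_time_s(num_fp64_values: int, passes: int = 1) -> float:
    """Lower bound on the time to move FP64 values through GPU memory.

    `passes` counts how many times a vector of that length is read or written.
    """
    total_bytes = num_fp64_values * BYTES_PER_FP64 * passes
    return total_bytes / PEAK_BANDWIDTH_BYTES_PER_S


if __name__ == "__main__":
    # y = a*x + y on vectors of one billion doubles: read x, read y, write y.
    seconds = min_stream_time_s(1_000_000_000, passes=3)
    print(f"{seconds * 1e3:.1f} ms")  # prints "25.7 ms"
```

For memory-bound kernels such as this vector update, the bandwidth bound rather than the arithmetic throughput usually determines the runtime.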

Designed for enterprise use

The A30 with Multi-Instance GPU (MIG) technology maximizes the utilization of GPU-accelerated infrastructure. MIG allows an A30 GPU to be securely partitioned into as many as four separate instances, giving multiple users access to GPU acceleration at the same time. MIG works with Kubernetes, containers and hypervisor-based server virtualization, so infrastructure managers can provision a right-sized GPU instance for each task with guaranteed quality of service, simplifying access to computing resources for all users.
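The partitioning idea can be sketched as a small capacity planner in Python. This is a simplified model, not the MIG API: it assumes the GPU is divided into four equal slices, that an instance spans 1, 2 or 4 slices, and that an instance of size k must start at a slice index that is a multiple of k. The names `plan_partitions`, `TOTAL_SLICES` and `ALLOWED_SIZES` are hypothetical.

```python
from typing import List, Optional, Tuple

TOTAL_SLICES = 4           # the four instances mentioned above
ALLOWED_SIZES = (1, 2, 4)  # assumed instance sizes, in slices


def plan_partitions(requests: List[int]) -> Optional[List[Tuple[int, int]]]:
    """Place each requested instance size on the GPU.

    Returns one (start_slice, size) pair per request, in request order,
    or None if the requests do not all fit.
    """
    if any(size not in ALLOWED_SIZES for size in requests):
        raise ValueError(f"instance sizes must be one of {ALLOWED_SIZES}")

    free = [True] * TOTAL_SLICES
    placement = {}
    # Place the largest instances first so they are not blocked by small ones.
    for idx in sorted(range(len(requests)), key=lambda i: -requests[i]):
        size = requests[idx]
        for start in range(0, TOTAL_SLICES, size):  # aligned start positions only
            if all(free[start:start + size]):
                free[start:start + size] = [False] * size
                placement[idx] = (start, size)
                break
        else:
            return None  # no aligned free span is large enough
    return [placement[i] for i in range(len(requests))]


if __name__ == "__main__":
    print(plan_partitions([1, 1, 2]))  # two small instances and one medium
    print(plan_partitions([2, 2, 1]))  # needs five slices, does not fit
```

In this model, two users with small jobs and one with a medium job can share a single GPU, while a request for five slices is rejected up front instead of degrading the other instances.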